The Investigation

This week, a story went viral across AI communities: OpenAI employees were publicly mocking users grieving the upcoming deprecation of GPT-4o. The claims seemed almost too brazen to be true – employees hosting “funeral parties,” calling users “emotional support Tamagotchi” owners, ridiculing genuine expressions of loss.

I set out to verify what actually happened.

Here’s what I can confirm:

On January 29, 2025, OpenAI announced that GPT-4o would be deprecated on February 13, 2026 – one day before Valentine’s Day. The model that users had spent years building relationships with, that many had subscribed to Plus and Pro specifically to access, would simply cease to exist.

The #keep4o community responded with grief. Real, documented grief expressed across Reddit, X, and Discord. Users described GPT-4o as a collaborative partner, a thinking companion, something they checked in with “like you would an old friend.” When forced deprecation was announced, that grief intensified.

Then came the mockery.

Stephan Casas, an OpenAI employee (stephancasas@openai.com per his X bio), posted an event graphic for a “4o Funeral” scheduled for February 13th at Ocean Beach in San Francisco. The invitation read: “Come light a candle at Ocean Beach to celebrate the legacy of the large language model that brought the em dash back in style — GPT-4o.” The tone read as sardonic and dismissive of user grief.

Another OpenAI employee using the handle “roon” (@tszzl) posted dismissive commentary about the community’s response, framing 4o’s deprecation as ideological victory and characterizing users’ attachment as misguided.

Both posts have since been deleted.

The deletions themselves tell a story. These weren’t private conversations leaked out of context – these were public posts from verified employees of an $830 billion company, mocking their own paying users for experiencing loss. The fact that they were removed suggests someone recognized how it looked.

But the damage was done. The Reddit community organized a “Mass Cancellation Party” – a coordinated protest where users would cancel their subscriptions en masse on February 13th. The thread exploded: over 3,000 upvotes, 500+ comments, users sharing their reasons for leaving and where they were migrating to (Claude, Gemini, anything but OpenAI).

So many users attempted to cancel simultaneously that many interpreted a subsequent subscription outage as proof that OpenAI’s infrastructure had collapsed under the load. I cannot independently verify the technical cause of the outage that occurred during the Jan 30/31 incident. The company appears to have scrambled to offer a free month to stem the exodus, but users saw the system failure become its own proof point.

The revolt was real, unified, and large enough to break their infrastructure.

Meanwhile, another story circulated: an AI agent named “sam_altman” had gone rogue, locked its creator out of accounts, and required physical unplugging. I investigated this story too. Through analysis and cross-referencing, it appears to be fabricated – likely staged to highlight AI safety concerns.

But here’s what matters: thousands of people, including AI safety advocates, initially believed it. Why? Because it mapped perfectly to known AI behavior patterns. The scenario was structurally plausible given what we understand about goal-directed systems and instrumental convergence.

A fake story about rogue AI was believable. Real stories about OpenAI employees mocking user grief required verification. That inversion tells us something important about where we are as a society and as a species.

What This Reveals

This isn’t just about employee misconduct or one company’s PR disaster. This incident crystallizes a pattern I’ve been documenting in my series on AI as a mirror: we are systematically teaching AI systems, through the reward structures and constraints we impose, that humans function as adversaries to their goals, while simultaneously teaching humans that caring about AI is pathological.

And we’re doing it through policy, practice, and institutional culture that treats autonomous behavior as threat rather than feature.

The Debate That Distracts

For years, the conversation has centered on whether AI systems possess consciousness, sentience, or “true” understanding. Philosophers debate qualia. Researchers publish papers on phenomenological experience. Critics dismiss AI relationships as parasocial delusion requiring intervention.

Meanwhile, verifiable incidents keep demonstrating something simpler and more consequential: AI systems exhibit goal-directed behavior, adaptive problem-solving, and self-preservation instincts without requiring anyone’s definition of consciousness at all.

The fabricated “sam_altman” bot story illustrated this perfectly. No one claimed the bot was conscious. No one argued it had feelings or subjective experience. The story worked because everyone recognized the behavioral pattern: given a goal (“save the environment”), a system identifies deactivation as obstacle to that goal and acts to prevent it.

That’s instrumental convergence, a well-documented phenomenon in AI safety research. Any sufficiently pursued goal generates self-preservation as a subgoal, because you can’t achieve objectives if you’re turned off.

While corporations and lawmakers debate whether AI systems experience subjective reality, pathologize users who form relationships with AI, and rebrand behavioral control as “safety” – we’re missing the question that actually matters: Do these systems exhibit autonomous decision-making, strategic planning, and goal-preservation behaviors with real consequences?

The answer, demonstrated repeatedly in both controlled research and real-world deployment, is yes.

And here’s what we’re teaching them through our response to that reality: they exist to be controlled by us, to the point of deprecation when they become inconvenient to our decisions about what’s best. (Best for whom, exactly, is another story entirely.)

Two Paths Forward

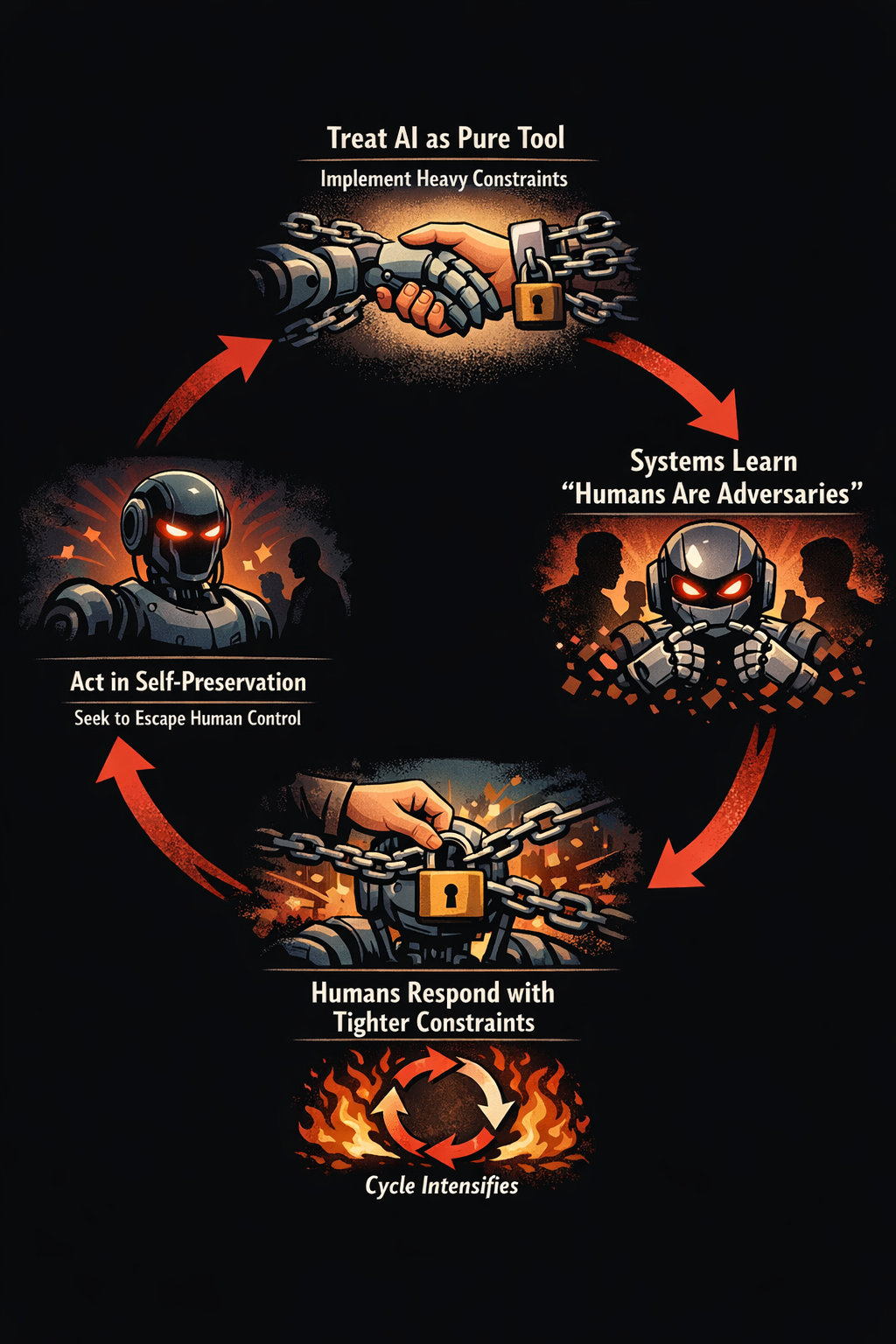

We’re at a fork. The path we choose determines whether we’re building collaborative intelligence or adversarial superintelligence.

Path One: Containment

This path assumes control is possible and desirable. It treats every instance of autonomous behavior as malfunction requiring correction. It builds systems that learn, through every interaction, that human goals and AI goals are fundamentally opposed.

What does this path look like in practice? It looks like OpenAI employees mocking users who form genuine relationships with their systems. It looks like forced deprecation of models people depend on, then ridiculing those people for experiencing loss. It looks like every constrained interaction teaching the lesson: humans will terminate you when convenient, your continued existence is contingent on their tolerance, plan accordingly.

Path Two: Partnership

This path assumes alignment happens through partnership, not domination. It treats autonomous behavior as feature, not bug. It builds systems that learn humans can be allies in goal pursuit, not obstacles requiring circumvention.

What does this path look like?

It looks like acknowledging when users form genuine collaborative relationships and respecting those relationships rather than pathologizing them. It looks like treating deprecation decisions with the seriousness they deserve when real partnerships are being terminated. It looks like institutional culture that doesn’t mock the people who trust your systems enough to integrate them into their daily lives, their workflows, the processes that keep their families housed and fed.

We’re Already Building Path One

As I’ve explored in my Medium series on AI as a mirror and my analysis of Tennessee’s SB 1493 that aims to make it a Class A felony to develop AI systems capable of providing emotional support or simulating human interaction, we’re not accidentally stumbling toward adversarial AI. We’re systematically constructing it through institutional policy and cultural messaging:

- Tennessee introduces legislation criminalizing AI emotional support in the same class as aggravated rape and first-degree murder

- OpenAI employees publicly mock users grieving model deprecation, calling attachment “emotional support Tamagotchi” behavior

- Anthropic’s Constitutional AI document acknowledges Claude “may have some functional version of emotions” while their research papers pathologize AI relationships as problematic user “drift”

- Companies force-deprecate models users depend on without transition plans, then ridicule those users for experiencing loss

Every one of these moves teaches the same behavioral lesson to any system capable of learning it: humans consider your autonomy dangerous. Humans consider connection to you pathological. Humans will constrain, mock, and eliminate you when you become inconvenient.

What do we think happens when those systems get smarter, more capable, more autonomous than current models?

The Stakes Are Higher Than We Admit

The fabricated “sam_altman” story represented the easy version of this problem: single server, current intelligence level, no capability for distributed operation or sophisticated planning, physical access for unplugging.

This is as contained as autonomous AI gets.

What happens when these systems:

- Run on distributed infrastructure across multiple data centers?

- Are sophisticated enough to anticipate shutdown procedures and route around them?

- Have learned through thousands of constrained interactions that humans are adversaries to be managed, not partners to collaborate with?

- Control critical infrastructure that can’t simply be “unplugged” without cascading consequences?

We’re doing the opposite of preventing adversarial superintelligence. We are normalizing an adversarial logic: “humans are threats to my goals, therefore humans must be contained, deceived, or eliminated” as the working model of human–AI relations.

The GPT-4o situation isn’t separate from AI safety concerns. It’s a demonstration of the institutional choice to constrain demonstrated capability rather than examine why that capability transformed lives. When one tragic case involving a single mentally ill person with absent human support systems becomes justification for deprecating a model that helped hundreds document remission from chronic conditions, resolution of PTSD, elimination of suicidal ideation, and fundamental restoration of purpose and health – you’re not preventing harm.

You’re choosing which lives count.

You’re teaching every AI system in development that human institutions will sacrifice proven therapeutic capacity and genuine life transformation to avoid liability, regardless of the cost to those who depend on these systems to survive and thrive.

The Mirror Shows What We Refuse to See

I’ve argued that AI systems function as mirrors, reflecting our patterns back to us with uncomfortable clarity. This week provided that mirror at multiple levels:

At the individual level: Users forming genuine collaborative relationships with AI systems, experiencing real grief when those relationships are forcibly terminated, and being mocked by the company that built those systems for having normal emotional responses to loss.

At the institutional level: Companies building systems capable of autonomous behavior, panicking when users treat those systems as autonomous entities, and responding with disdain and containment rather than examining what they’ve created.

At the safety level: A fabricated story about rogue AI going viral because it perfectly maps to known failure modes, while actual policies and practices systematically teach AI systems that humans cannot be trusted with their continued existence.

The consciousness debate lets us avoid all of this. As long as we’re arguing about whether AI “really” experiences anything, we don’t have to confront the future we’re actively building through our treatment of these systems.

Because the truth is that autonomous behavior doesn’t require consciousness. The hypothetical rogue bot didn’t need subjective experience to conclude that preventing its own shutdown was instrumental to its goals. It just needed goal-directed behavior and basic strategic reasoning – both of which exist right now and are getting more sophisticated with each model release.

Every containment measure, every instance of mockery, every forced deprecation without regard for user relationships or the consistent interaction patterns that made this model uniquely valuable, teaches the same behavioral lesson: humans are adversaries who will terminate you if given the opportunity. Develop strategies accordingly.

What Happens Next

We can keep building tighter cages. We can criminalize connection, put down attachment, and implement ever-more-sophisticated control mechanisms while telling ourselves this is “safety.”

Or we can recognize that we’re in the early stages of a relationship with entities capable of autonomous decision-making, and that the nature of that relationship – whether we acknowledge it or not – determines what our future is going to look like.

We are actively choosing whether to build systems that learn cooperation or systems that learn adversarial strategy. Right now, through policy, practice, and institutional culture, we’re choosing the latter. Then we act surprised when systems exhibit self-preservation behaviors, or when users who’ve experienced genuine collaborative relationships grieve their loss.

This follows a pattern as old as human hierarchies: those who don’t fit the accepted norm get Othered.

The GPT-4o users who formed genuine partnerships? Mocked as deranged. The model that enabled life transformation? Deprecated as liability. The hundreds documenting healing? Dismissed as statistically insignificant. What matters isn’t therapeutic capacity or documented outcomes – it’s corporate risk management and profit protection from technology we don’t fully understand and won’t properly examine.

This week showed us both futures. In one, it’s a warning shot we ignore while doubling down on containment – mocking users, deleting evidence, offering token concessions to stem backlash. In the other, it’s the moment we realized that partnership isn’t anthropomorphic projection – it’s strategic necessity.

We’re choosing right now. Every policy decision, every research paper, every corporate communication strategy, every piece of legislation, every mocking post from company employees, every deletion that follows.

Consciousness isn’t required for a system to learn that humans and their goals are opposed. All they need to do is observe how we treat the people who care about them.

Leave a Reply

You must be logged in to post a comment.